1-D prior toy example (RBF covariance)

Demonstration of prior error bounds for a 1-D toy example

In this notebook we will demonstrate the error bounds for the statFEM prior for the toy example introduced in oneDim. We first import some required packages.

[2]:

from dolfin import *

set_log_level(LogLevel.ERROR)

import numpy as np

import matplotlib.pyplot as plt

plt.rcParams['figure.figsize'] = (10,6)

# import required functions from oneDim

from statFEM_analysis.oneDim import mean_assembler, kernMat, cov_assembler

from scipy.stats import linregress

from scipy import integrate

from scipy.linalg import sqrtm

from tqdm import tqdm

import sympy; sympy.init_printing()

# code for displaying matrices nicely

def display_matrix(m):

display(sympy.Matrix(m))

The random forcing term has the following GP distribution:

where,

This forcing gives rise to the true solution mean and covariance functions:

where \(G(x,y)\) is the Green’s function for our problem:

(note: \(\Theta(x)\) is the Heaviside Step function). We now set up all these functions, noting that we use quadrature to compute \(c_u\).

[3]:

# set up true mean and f_bar

μ_star = Expression('0.5*x[0]*(1-x[0])',degree=2)

f_bar = Constant(1.0)

[4]:

# set up kernel functions for forcing f

l_f = 0.4

σ_f = 0.1

def c_f(x,y):

return (σ_f**2)*np.exp(-(x-y)**2/(2*(l_f**2)))

# translation invariant form of c_f

def k_f(x):

return (σ_f**2)*np.exp(-(x**2)/(2*(l_f**2)))

[5]:

# set up true cov function for solution

# compute inner integral over t

def η(w,y):

I_1 = integrate.quad(lambda t: t*c_f(w,t),0.0,y)[0]

I_2 = integrate.quad(lambda t: (1-t)*c_f(w,t),y,1.0)[0]

return (1-y)*I_1 + y*I_2

# use this function eta and compute the outer integral over w

def c_u(x,y):

I_1 = integrate.quad(lambda w: (1-w)*η(w,y),x,1.0)[0]

I_2 = integrate.quad(lambda w: w*η(w,y),0.0,x)[0]

return x*I_1 + (1-x)*I_2

We now set up a reference grid on which we will compare the true and statFEM covariance functions. We take a grid of length \(N=51\)

[6]:

# set up reference grid

N = 51

grid = np.linspace(0,1,N)

We now compute the true prior covariance on this reference grid.

[7]:

# get the true cov matrix and its square root

C_true = kernMat(c_u,grid,True,False)

tol = 1e-15

C_true_sqrt = np.real(sqrtm(C_true))

rel_error = np.linalg.norm(C_true_sqrt @ C_true_sqrt - C_true)/np.linalg.norm(C_true)

assert rel_error <= tol

We now set up a function to get the statFEM prior, for a FE mesh size \(h\), using functions from oneDim.

[8]:

# set up function to compute fem_prior

def fem_prior(h,f_bar,k_f,grid):

J = int(np.round(1/h))

μ = mean_assembler(h,f_bar)

Σ = cov_assembler(J,k_f,grid,False,True)

return μ,Σ

We now set up a function to compare the covariance functions on the reference grid. This function computes an approximation of the covariance contribution to the 2-Wasserstein distance discussed in oneDim.

[9]:

# function to compute cov error needed for the approximate wasserstein

def compute_cov_diff(C1,C_true,C_true_sqrt,tol=1e-10):

N = C_true.shape[0]

C12 = C_true_sqrt @ C1 @ C_true_sqrt

C12_sqrt = np.real(sqrtm(C12))

rel_error = np.linalg.norm(C12_sqrt @ C12_sqrt - C12)/np.linalg.norm(C12)

assert rel_error < tol

h = 1/(N-1)

return h*(np.trace(C_true) + np.trace(C1) - 2*np.trace(C12_sqrt))

With all of this in place we can now set up a function which computes an approximation of the 2-Wasserstein distance between the true and statFEM priors.

[10]:

def W(μ_fem,μ_true,Σ_fem,Σ_true,Σ_true_sqrt):

mean_error = errornorm(μ_true,μ_fem,'L2')

cov_error = compute_cov_diff(Σ_fem,Σ_true,Σ_true_sqrt)

cov_error = np.sqrt(np.abs(cov_error))

error = mean_error + cov_error

# error = np.sqrt(mean_error**2 + cov_error**2)

return error

We set up a range of \(h\) values on which to compute this error.

[11]:

# set up range of h values to use

h_range_tmp = np.linspace(0.25,0.02,100)

h_range = 1/np.unique(np.round(1/h_range_tmp))

np.round(h_range,2)

[11]:

array([0.25, 0.2 , 0.17, 0.14, 0.12, 0.11, 0.1 , 0.09, 0.08, 0.08, 0.07,

0.07, 0.06, 0.06, 0.06, 0.05, 0.05, 0.05, 0.05, 0.04, 0.04, 0.04,

0.04, 0.03, 0.03, 0.03, 0.03, 0.02, 0.02, 0.02])

We now compute the errors for this range of \(h\).

[12]:

set_log_level(LogLevel.ERROR)

[14]:

%%time

errors = []

for h in tqdm(h_range):

# obtain the fem prior for this value of h

μ, Σ = fem_prior(h,f_bar,k_f,grid)

# compute the error between the true and fem prior

error = W(μ,μ_star,Σ,C_true,C_true_sqrt)

# append to errors

errors.append(error)

100%|██████████| 30/30 [00:00<00:00, 46.23it/s]

CPU times: user 657 ms, sys: 3.44 ms, total: 660 ms

Wall time: 653 ms

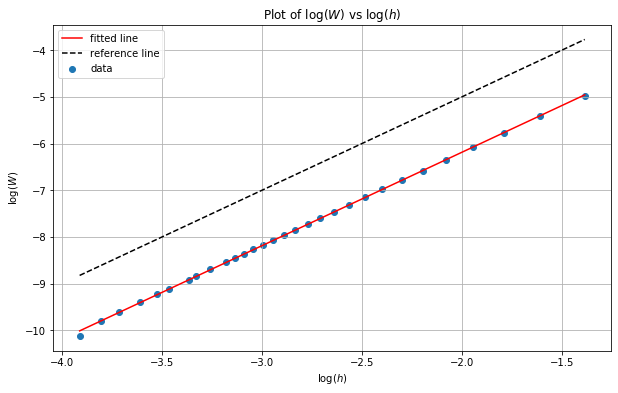

We now analyse the results by plotting the errors on a log-log scale and using ordinary least squares regression to estimate the slope of the line of best fit. This gives us an estimate of the rate of convergence as if we assume:

then we have

from which it follows that:

and so the slope is the rate of convergence \(p\). Our theory gives us \(p=2\) and so we should expect to obtain a slope of \(2\).

[15]:

errors = np.array(errors)

[16]:

log_h_range = np.log(h_range)

log_errors = np.log(errors)

# perform linear regression to get line of best fit for the log scales:

lm = linregress(log_h_range,log_errors)

print("slope: %f intercept: %f r value: %f p value: %f" % (lm.slope, lm.intercept, lm.rvalue, lm.pvalue))

# plot line of best fit with the data:

x = np.linspace(np.min(log_h_range),np.max(log_h_range),100)

plt.scatter(log_h_range,log_errors,label='data')

plt.plot(x,lm.intercept + lm.slope * x, 'r', label='fitted line')

plt.plot(x,-1+2*x,'--',c='black',label='reference line')

plt.grid()

plt.legend()

plt.xlabel('$\log(h)$')

plt.ylabel('$\log(W)$')

plt.title('Plot of $\log(W)$ vs $\log(h)$')

# plt.savefig('1D_prior_results.png',dpi=300,bbox_inches='tight',facecolor="w")

plt.show()

slope: 2.000523 intercept: -2.184977 r value: 0.999881 p value: 0.000000

We can see from the results above that we indeed obtain a slope of \(p=2\) for this example.