2-D prior toy example (RBF covariance)

Demonstration of prior error bounds for a 2-D toy example

In this notebook we will demonstrate the error bounds for the statFEM prior for the toy example introduced in twoDim. We first import some required packages.

[1]:

from dolfin import *

set_log_level(LogLevel.ERROR)

import numpy as np

import numba

import matplotlib.pyplot as plt

plt.rcParams['figure.figsize'] = (10,6)

# import required functions from twoDim

from statFEM_analysis.twoDim import mean_assembler, kernMat, cov_assembler

from scipy.linalg import sqrtm

import sympy; sympy.init_printing()

from tqdm import tqdm

# code for displaying matrices nicely

def display_matrix(m):

display(sympy.Matrix(m))

Since we do not have ready access to a solution operator for this problem we will utilise a different approach to demonstrate the appropriate rate for the error bounds. This approach involves comparing the statFEM distributions on finer and finer meshes as follows. We first assume we have the following error bound:

From this we have:

The triangle inequality then yields:

And so with the above we obtain:

Similarly we have:

Dividing the two above equations yields:

From which it follows:

Note that this ratio above does not rely on having access to the true prior \(\nu_{\star}\).

We will thus compare the errors on a succesively refined mesh and take the base-2 log of the above ratio. We hope to see these logarithms converge to \(p=2\).

We now set up the mean and kernel functions for the random forcing term \(f\).

[2]:

# set up mean and kernel functions

f_bar = Constant(1.0)

l_f = 0.4

σ_f = 0.1

@numba.jit

def k_f(x):

return (σ_f**2)*np.exp(-(x**2)/(2*(l_f**2)))

We now set up a function to get the statFEM prior for a FE mesh size \(h\), using functions from twoDim.

[3]:

def fem_prior(h,f_bar,k_f,grid):

J = int(np.round(1/h))

μ = mean_assembler(h,f_bar)

Σ = cov_assembler(J,k_f,grid,False,True)

return μ,Σ

We now set up a reference grid on which we will compare the covariance matrices.

[4]:

N = 41

x_range = np.linspace(0,1,N)

grid = np.array([[x,y] for x in x_range for y in x_range])

We now set up a function to compare the covariance functions on the reference grid.

[5]:

def compute_cov_diff(C1,C2,tol=1e-10):

N = np.sqrt(C1.shape[0])

C1_sqrt = np.real(sqrtm(C1))

rel_error_1 = np.linalg.norm(C1_sqrt @ C1_sqrt - C1)/np.linalg.norm(C1)

assert rel_error_1 < tol

C12 = C1_sqrt @ C2 @ C1_sqrt

C12_sqrt = np.real(sqrtm(C12))

rel_error_12 = np.linalg.norm(C12_sqrt @ C12_sqrt - C12)/np.linalg.norm(C12)

assert rel_error_12 < tol

hSq = (1/(N-1))**2

return hSq*(np.trace(C1) + np.trace(C2) - 2*np.trace(C12_sqrt))

We now set up a function to compute the Wasserstein distance between the statFEM priors.

[6]:

def W(μ_1,μ_2,Σ_1,Σ_2):

mean_error = errornorm(μ_1,μ_2,'L2')

cov_error = compute_cov_diff(Σ_1,Σ_2)

cov_error = np.sqrt(np.abs(cov_error))

error = mean_error + cov_error

return error

In the interests of memory efficiency we will now create a function which will compute the ratios of errors mentioned above by succesively refining the FE mesh.

[7]:

def refine(h,n,f_bar,k_f,grid):

# set up empty lists to hold h-values and errors (this being the ratios)

h_range = []

errors = []

# get the statFEM prior for h and h/2

μ_1, Σ_1 = fem_prior(h,f_bar,k_f,grid)

μ_2, Σ_2 = fem_prior(h/2,f_bar,k_f,grid)

# compute the distance between these and store in numerator variable

numerator = W(μ_1,μ_2,Σ_1,Σ_2)

# succesively refine the mesh by halving and do this n times

for i in tqdm(range(n), desc="inner loop", position=1, leave=False):

# store mean and cov for h/2 in storage for h

μ_1, Σ_1 = μ_2, Σ_2

# in storage for h/2 store mean and cov for h/4

μ_2, Σ_2 = fem_prior(h/4,f_bar,k_f,grid)

# compute the distance between the priors for h/2 and h/4

# and store in denominator variable

denominator = W(μ_1,μ_2,Σ_1,Σ_2)

# compute the ratio and store in error

error = numerator/denominator

# append the current value of h and the ratio

h_range.append(h)

errors.append(error)

# store denominator in numerator and halve h

numerator = denominator

h = h/2

# return the list of h-values together with the ratios for these values

return h_range,errors

Note:

The above function will compute the ratio \(\frac{W(\nu_{h},\nu_{h/2})}{W(\nu_{h/2},\nu_{h/4})}\) for the values \(h,h/2,\cdots,h/2^{n-1}\).

We now set up a list of starting \(h\) values and number of refinements \(n\) to get a decent number of ratios to approximate \(p\).

[8]:

my_list = [(0.25,4),(0.2,3),(0.175,3),(0.22,4),(0.176,3),(0.177,3),(0.178,3),(0.179,3),(0.18,3),(0.21,3),(0.215,3),(0.1,2),(0.3,4),(0.31,4),(0.315,4),(0.24,4),(0.245,4),(0.25,4),(0.14,2),(0.16,2),(0.15,2),(0.18,2)]

Warning:

As we are now in 2-D the matrices needed to compute the statFEM prior get very large in size as \(h\) decreases. Due to memory constraints we must thus make sure \(h\) does not get too small.

We now compute the results:

[9]:

set_log_level(LogLevel.ERROR)

[10]:

n_outer=len(my_list)

[12]:

%%time

h_range = []

errors = []

for i,(h,n) in tqdm(enumerate(my_list), desc="outer", position=0):

h_range_tmp, errors_tmp = refine(h,n,f_bar,k_f,grid)

h_range.extend(h_range_tmp)

errors.extend(errors_tmp)

outer: 0it [00:00, ?it/s]

inner loop: 0%| | 0/4 [00:00<?, ?it/s]

inner loop: 25%|██▌ | 1/4 [01:47<05:21, 107.30s/it]

inner loop: 50%|█████ | 2/4 [03:34<03:34, 107.19s/it]

inner loop: 75%|███████▌ | 3/4 [05:32<01:52, 112.05s/it]

inner loop: 100%|██████████| 4/4 [10:27<00:00, 184.29s/it]

outer: 1it [12:15, 735.36s/it]

inner loop: 0%| | 0/3 [00:00<?, ?it/s]

inner loop: 33%|███▎ | 1/3 [01:48<03:37, 108.93s/it]

inner loop: 67%|██████▋ | 2/3 [03:41<01:51, 111.29s/it]

inner loop: 100%|██████████| 3/3 [05:49<00:00, 118.89s/it]

outer: 2it [19:53, 572.34s/it]

inner loop: 0%| | 0/3 [00:00<?, ?it/s]

inner loop: 33%|███▎ | 1/3 [01:51<03:42, 111.11s/it]

inner loop: 67%|██████▋ | 2/3 [03:55<01:59, 119.13s/it]

inner loop: 100%|██████████| 3/3 [06:17<00:00, 129.34s/it]

outer: 3it [28:01, 533.81s/it]

inner loop: 0%| | 0/4 [00:00<?, ?it/s]

inner loop: 25%|██▌ | 1/4 [01:50<05:30, 110.00s/it]

inner loop: 50%|█████ | 2/4 [03:40<03:40, 110.35s/it]

inner loop: 75%|███████▌ | 3/4 [05:40<01:54, 114.63s/it]

inner loop: 100%|██████████| 4/4 [12:40<00:00, 235.19s/it]

outer: 4it [42:31, 666.49s/it]

inner loop: 0%| | 0/3 [00:00<?, ?it/s]

inner loop: 33%|███▎ | 1/3 [01:50<03:40, 110.29s/it]

inner loop: 67%|██████▋ | 2/3 [03:42<01:51, 111.64s/it]

inner loop: 100%|██████████| 3/3 [06:05<00:00, 126.01s/it]

outer: 5it [50:32, 599.50s/it]

inner loop: 0%| | 0/3 [00:00<?, ?it/s]

inner loop: 33%|███▎ | 1/3 [01:51<03:42, 111.49s/it]

inner loop: 67%|██████▋ | 2/3 [03:35<01:46, 106.85s/it]

inner loop: 100%|██████████| 3/3 [05:37<00:00, 114.13s/it]

outer: 6it [57:58, 547.59s/it]

inner loop: 0%| | 0/3 [00:00<?, ?it/s]

inner loop: 33%|███▎ | 1/3 [01:45<03:31, 105.72s/it]

inner loop: 67%|██████▋ | 2/3 [03:33<01:46, 106.85s/it]

inner loop: 100%|██████████| 3/3 [05:38<00:00, 115.16s/it]

outer: 7it [1:05:22, 513.63s/it]

inner loop: 0%| | 0/3 [00:00<?, ?it/s]

inner loop: 33%|███▎ | 1/3 [01:48<03:37, 108.50s/it]

inner loop: 67%|██████▋ | 2/3 [03:36<01:48, 108.13s/it]

inner loop: 100%|██████████| 3/3 [05:46<00:00, 118.31s/it]

outer: 8it [1:12:54, 494.04s/it]

inner loop: 0%| | 0/3 [00:00<?, ?it/s]

inner loop: 33%|███▎ | 1/3 [01:47<03:35, 107.93s/it]

inner loop: 67%|██████▋ | 2/3 [03:36<01:48, 108.48s/it]

inner loop: 100%|██████████| 3/3 [05:45<00:00, 117.62s/it]

outer: 9it [1:20:27, 481.20s/it]

inner loop: 0%| | 0/3 [00:00<?, ?it/s]

inner loop: 33%|███▎ | 1/3 [01:47<03:35, 107.57s/it]

inner loop: 67%|██████▋ | 2/3 [03:30<01:44, 104.66s/it]

inner loop: 100%|██████████| 3/3 [05:22<00:00, 107.93s/it]

outer: 10it [1:27:38, 465.73s/it]

inner loop: 0%| | 0/3 [00:00<?, ?it/s]

inner loop: 33%|███▎ | 1/3 [01:47<03:34, 107.42s/it]

inner loop: 67%|██████▋ | 2/3 [03:36<01:48, 108.35s/it]

inner loop: 100%|██████████| 3/3 [05:31<00:00, 111.46s/it]

outer: 11it [1:34:52, 455.90s/it]

inner loop: 0%| | 0/2 [00:00<?, ?it/s]

inner loop: 50%|█████ | 1/2 [01:53<01:53, 113.28s/it]

inner loop: 100%|██████████| 2/2 [04:03<00:00, 123.06s/it]

outer: 12it [1:40:49, 425.90s/it]

inner loop: 0%| | 0/4 [00:00<?, ?it/s]

inner loop: 25%|██▌ | 1/4 [01:52<05:38, 112.92s/it]

inner loop: 50%|█████ | 2/4 [03:46<03:46, 113.44s/it]

inner loop: 75%|███████▌ | 3/4 [05:45<01:55, 115.66s/it]

inner loop: 100%|██████████| 4/4 [08:50<00:00, 143.07s/it]

outer: 13it [1:51:29, 490.56s/it]

inner loop: 0%| | 0/4 [00:00<?, ?it/s]

inner loop: 25%|██▌ | 1/4 [01:55<05:46, 115.36s/it]

inner loop: 50%|█████ | 2/4 [03:52<03:53, 116.62s/it]

inner loop: 75%|███████▌ | 3/4 [05:55<01:59, 119.46s/it]

inner loop: 100%|██████████| 4/4 [08:53<00:00, 142.30s/it]

outer: 14it [2:02:19, 538.73s/it]

inner loop: 0%| | 0/4 [00:00<?, ?it/s]

inner loop: 25%|██▌ | 1/4 [01:50<05:30, 110.06s/it]

inner loop: 50%|█████ | 2/4 [03:44<03:45, 112.73s/it]

inner loop: 75%|███████▌ | 3/4 [05:40<01:54, 114.30s/it]

inner loop: 100%|██████████| 4/4 [08:35<00:00, 137.98s/it]

outer: 15it [2:12:40, 563.63s/it]

inner loop: 0%| | 0/4 [00:00<?, ?it/s]

inner loop: 25%|██▌ | 1/4 [01:54<05:42, 114.04s/it]

inner loop: 50%|█████ | 2/4 [03:45<03:45, 112.76s/it]

inner loop: 75%|███████▌ | 3/4 [05:41<01:54, 114.24s/it]

inner loop: 100%|██████████| 4/4 [10:20<00:00, 179.00s/it]

outer: 16it [2:24:54, 614.78s/it]

inner loop: 0%| | 0/4 [00:00<?, ?it/s]

inner loop: 25%|██▌ | 1/4 [01:47<05:22, 107.53s/it]

inner loop: 50%|█████ | 2/4 [03:34<03:34, 107.43s/it]

inner loop: 75%|███████▌ | 3/4 [05:29<01:50, 110.69s/it]

inner loop: 100%|██████████| 4/4 [09:37<00:00, 164.91s/it]

outer: 17it [2:36:15, 634.89s/it]

inner loop: 0%| | 0/4 [00:00<?, ?it/s]

inner loop: 25%|██▌ | 1/4 [01:49<05:29, 109.90s/it]

inner loop: 50%|█████ | 2/4 [03:37<03:36, 108.43s/it]

inner loop: 75%|███████▌ | 3/4 [05:31<01:50, 110.99s/it]

inner loop: 100%|██████████| 4/4 [09:27<00:00, 160.23s/it]

outer: 18it [2:47:28, 646.31s/it]

inner loop: 0%| | 0/2 [00:00<?, ?it/s]

inner loop: 50%|█████ | 1/2 [01:44<01:44, 104.31s/it]

inner loop: 100%|██████████| 2/2 [03:36<00:00, 109.13s/it]

outer: 19it [2:52:50, 548.82s/it]

inner loop: 0%| | 0/2 [00:00<?, ?it/s]

inner loop: 50%|█████ | 1/2 [01:47<01:47, 107.43s/it]

inner loop: 100%|██████████| 2/2 [03:50<00:00, 116.67s/it]

outer: 20it [2:58:32, 486.83s/it]

inner loop: 0%| | 0/2 [00:00<?, ?it/s]

inner loop: 50%|█████ | 1/2 [01:55<01:55, 115.98s/it]

inner loop: 100%|██████████| 2/2 [03:51<00:00, 115.75s/it]

outer: 21it [3:04:21, 445.29s/it]

inner loop: 0%| | 0/2 [00:00<?, ?it/s]

inner loop: 50%|█████ | 1/2 [01:59<01:59, 119.45s/it]

inner loop: 100%|██████████| 2/2 [03:59<00:00, 119.97s/it]

outer: 22it [3:10:17, 518.95s/it]

CPU times: user 3h 7min 41s, sys: 1min 3s, total: 3h 8min 45s

Wall time: 3h 10min 17s

We now sort these results by \(h\) and get rid of duplicates (from repeats in \(h\)-values).

[13]:

h_range_array = np.array(h_range)

errors_array = np.array(errors)

[14]:

argInd = np.argsort(h_range_array)

hs = h_range_array[argInd]

es = errors_array[argInd]

hs,hs_ind = np.unique(hs,return_index=True)

es = es[hs_ind]

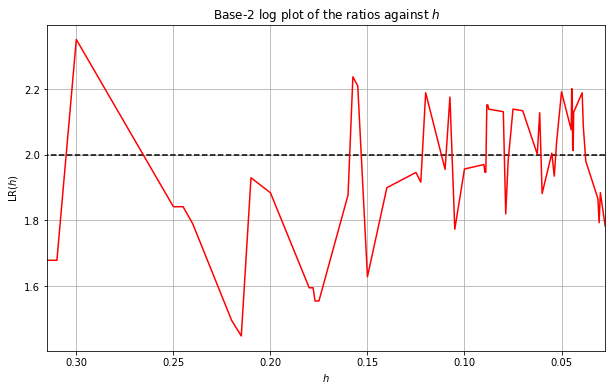

We now plot the base-2 logarithm of the ratios against \(h\) below:

[15]:

plt.plot(hs,np.log2(es),c='r')

plt.xlabel('$h$')

plt.ylabel('$\operatorname{LR}(h)$')

plt.title('Base-2 log plot of the ratios against $h$')

plt.hlines(2.0,hs[0],hs[-1],colors='black',linestyles='--')

plt.xlim(hs[-1],hs[0])

plt.grid()

plt.show()

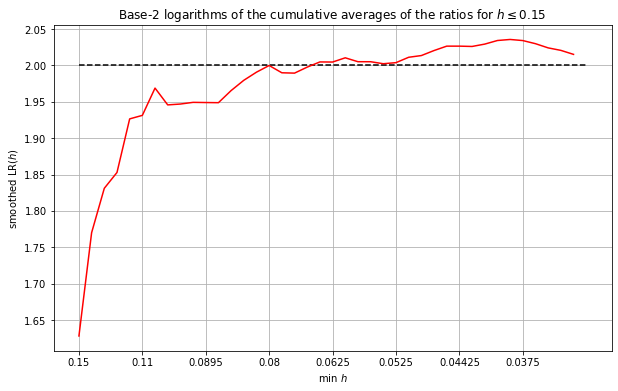

We can see from the above plot that the logarithms seems to be approaching \(p=2\) as \(h\) gets small just as predicted. However, the results aren’t that smooth and they haven’t seemed to settle on \(p=2\) yet. This can be due to memory constraints meaning we cannot use small enough \(h\). We thus smooth the above results by taking a cumulative average of the ratios and then applying \(\log_2\). We take the rolling average starting with large \(h\). We choose a cut-off point of \(h=0.15\), i.e. we discard any results for \(h>0.15\).

The results are shown below:

[17]:

# get the cumulative average of es:

i = -19

ES = es[:i]

HS = hs[:i]

pos = np.arange(0,40,step=5)

es_av = np.log2(np.cumsum(ES[::-1])/np.arange(1,len(ES)+1))

plt.plot(es_av,label='log_of_cum_av',c='r')

plt.xticks(pos,HS[::-1][pos])

plt.hlines(2.0,0,len(es_av),colors='black',linestyles='--')

plt.title('Base-2 logarithms of the cumulative averages of the ratios for $h\leq 0.15$')

plt.xlabel('min $h$')

plt.ylabel('smoothed $\operatorname{LR}(h)$')

plt.grid()

plt.show()

From the smoothed results above we can see more clearly that the ratios are converging to around \(p=2\). In fact, discarding the values corresponding to \(h>0.15\) seems to result in the the rolling average converging to a value slightly greater than \(2\).